Data mining is the analysis of large data sets to discover new patterns. It draws on methods from database management, data processing and statistical inference.


Weka is a package that offers users a collection of learning schemes and tools for data mining. Its algorithms can be applied directly to a dataset or called from your own Java code.

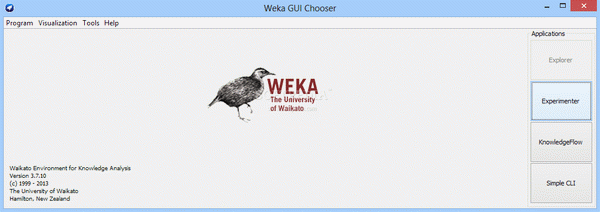

When you start the program, it offers four applications: 'Explorer', 'Experimenter', 'KnowledgeFlow' and 'Simple CLI'.

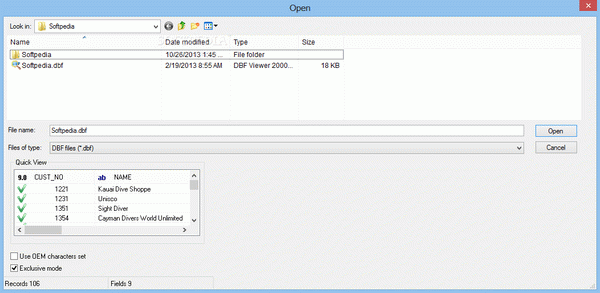

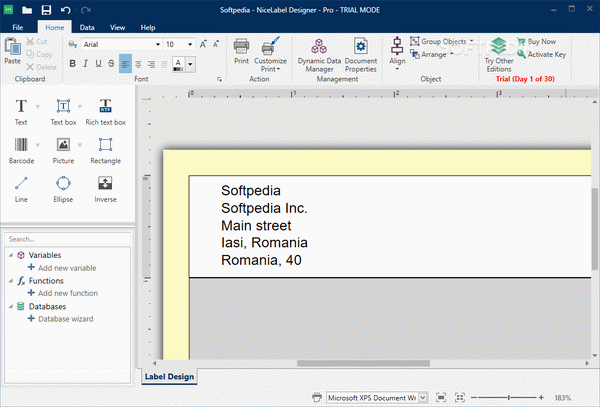

The first application, Explorer, lets you open a dataset or a database and edit it as you wish. You can filter the data, change its attributes and visualize the result in a bar chart. You can also classify the data with one of the available classifiers and run a complete cost/benefit analysis that automatically displays the cost matrix and the threshold curve.
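A cost/benefit analysis weights each kind of misclassification by a cost matrix and shows how the total cost changes as the decision threshold moves. The Python sketch below builds that idea from scratch for a two-class problem. It is not Weka's implementation. The function names, the `[actual][predicted]` cost-matrix layout and the example scores are all assumptions made for illustration.

```python
def confusion_at(scores, labels, threshold):
    """Count (tp, fp, fn, tn) when an instance is called positive if score >= threshold."""
    tp = fp = fn = tn = 0
    for score, is_positive in zip(scores, labels):
        predicted_positive = score >= threshold
        if predicted_positive and is_positive:
            tp += 1
        elif predicted_positive:
            fp += 1
        elif is_positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def total_cost(counts, cost_matrix):
    """Weight each confusion-matrix cell by its cost.

    Assumed layout: cost_matrix[actual][predicted], index 0 = positive,
    index 1 = negative; correct predictions usually cost 0.
    """
    tp, fp, fn, tn = counts
    return (tp * cost_matrix[0][0] + fn * cost_matrix[0][1]
            + fp * cost_matrix[1][0] + tn * cost_matrix[1][1])


def threshold_curve(scores, labels, cost_matrix):
    """Try every distinct score as the threshold; return (threshold, cost) pairs."""
    return [(t, total_cost(confusion_at(scores, labels, t), cost_matrix))
            for t in sorted(set(scores))]


if __name__ == "__main__":
    # Hypothetical positive-class probabilities and true labels.
    scores = [0.95, 0.80, 0.70, 0.55, 0.40, 0.30, 0.20, 0.10]
    labels = [True, True, False, True, False, True, False, False]
    # Assumption: a false negative costs 5, a false positive costs 1.
    costs = [[0, 5], [1, 0]]
    curve = threshold_curve(scores, labels, costs)
    print(curve)
    print("cheapest threshold:", min(curve, key=lambda pair: pair[1]))
```

With these assumed costs, the cheapest threshold is 0.30. At that threshold the model accepts two false positives (total cost 2) rather than miss any positive instance.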

In addition, the program includes tools for clustering, association rule mining and attribute evaluation. You can also use it for plotting: it displays a scatter plot for every pair of attributes, so you can see how each combination relates.
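Association rule miners such as Apriori rank rules using two measures. Support is how often the items occur together. Confidence is how often the right-hand side holds when the left-hand side does. The brute-force Python sketch below computes both measures, but only for single-item rules. The function names and the shopping-basket data are hypothetical, and a real Apriori implementation prunes candidate itemsets far more efficiently.

```python
from itertools import combinations


def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= set(t)) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """support(antecedent and consequent together) / support(antecedent)."""
    base = support(transactions, antecedent)
    if base == 0:
        return 0.0
    return support(transactions, set(antecedent) | set(consequent)) / base


def single_item_rules(transactions, min_support, min_confidence):
    """Brute-force every rule {a} -> {b}; Apriori would prune candidates instead."""
    items = sorted({item for t in transactions for item in t})
    rules = []
    for a, b in combinations(items, 2):
        if support(transactions, {a, b}) < min_support:
            continue  # the pair is too rare for either direction to qualify
        for lhs, rhs in ((a, b), (b, a)):
            conf = confidence(transactions, {lhs}, {rhs})
            if conf >= min_confidence:
                rules.append((lhs, rhs, conf))
    return rules


if __name__ == "__main__":
    # Hypothetical shopping baskets.
    baskets = [{"bread", "milk"}, {"bread", "butter"},
               {"bread", "milk", "butter"}, {"milk"}]
    for lhs, rhs, conf in single_item_rules(baskets, 0.5, 0.6):
        print(f"{lhs} -> {rhs}  conf={conf:.2f}")
```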

The Experimenter is suited to developing and comparing machine learning schemes. You configure an experiment by choosing its type (classification or regression), selecting the datasets and algorithms, and then running it. The results can be saved to an ARFF or CSV file, or to a database via JDBC.
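An Experimenter run repeats an evaluation many times (typically several runs of 10-fold cross-validation) and records one result row per run and fold. The Python sketch below mimics that loop. It uses a majority-class baseline in the spirit of Weka's ZeroR and writes the rows as CSV. The column names, seeds and helper functions are hypothetical and do not reproduce Weka's actual result format.

```python
import csv
import io
import random
from collections import Counter


def kfold_indices(n, k, seed):
    """Shuffle 0..n-1 with a fixed seed and deal the indices into k folds."""
    order = list(range(n))
    random.Random(seed).shuffle(order)
    return [order[i::k] for i in range(k)]


def majority_label(train_labels):
    """ZeroR-style baseline: always predict the most common training label."""
    return Counter(train_labels).most_common(1)[0][0]


def run_experiment(dataset_name, labels, runs=10, folds=10):
    """Repeat k-fold cross-validation `runs` times, one result row per run and fold."""
    rows = []
    for run in range(1, runs + 1):
        for fold, test_idx in enumerate(kfold_indices(len(labels), folds, seed=run), start=1):
            held_out = set(test_idx)
            train = [y for i, y in enumerate(labels) if i not in held_out]
            prediction = majority_label(train)
            correct = sum(1 for i in test_idx if labels[i] == prediction)
            rows.append({
                "dataset": dataset_name,
                "scheme": "majority_baseline",  # hypothetical scheme label
                "run": run,
                "fold": fold,
                "percent_correct": 100.0 * correct / len(test_idx),
            })
    return rows


def write_csv(rows, stream):
    """Write result rows with a header; pass a file opened with newline='' in practice."""
    writer = csv.DictWriter(stream, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    labels = ["successful"] * 36 + ["unsuccessful"] * 44  # class counts from the demo below
    buffer = io.StringIO()
    write_csv(run_experiment("heart", labels), buffer)
    print(buffer.getvalue()[:200])
```

Each row carries its run and fold number. A later analysis step can therefore pair up two schemes' scores on exactly the same split.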

You can also analyze and test a results file. The program lets you choose the significance level, the comparison field, the sorting criterion and the test base.
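The significance test compares two schemes on the same folds. A plain paired t-test treats the runs x folds results as independent, even though the training sets overlap. Weka's default tester is commonly described as using a corrected resampled t-test, which inflates the variance term by the test/train size ratio. The Python sketch below computes that corrected statistic under this assumption. The function name and example numbers are illustrative. The p-value lookup is omitted because the Python standard library has no Student-t distribution.

```python
import math
import statistics


def corrected_paired_t(scores_a, scores_b, n_train, n_test):
    """Corrected resampled paired t statistic for two schemes scored on the same folds.

    scores_a[i] and scores_b[i] must come from the same run/fold. The plain
    paired t-test divides the variance by k; the correction uses
    (1/k + n_test/n_train) instead, because cross-validation training sets overlap.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    k = len(diffs)
    mean = statistics.mean(diffs)
    var = statistics.variance(diffs)  # sample variance (divides by k - 1)
    if var == 0:
        # Identical differences on every fold: no spread to test against.
        return 0.0 if mean == 0 else math.copysign(math.inf, mean)
    return mean / math.sqrt((1 / k + n_test / n_train) * var)


if __name__ == "__main__":
    # Hypothetical accuracies from four folds; 72/8 train/test sizes assumed.
    a = [81, 82, 83, 84]
    b = [80, 80, 80, 80]
    print(corrected_paired_t(a, b, n_train=72, n_test=8))
```

To finish the test, compare the statistic with a Student-t critical value for k - 1 degrees of freedom at the chosen significance level. A statistics library such as SciPy can supply that value.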

Weka is easy to use, but it is aimed at users who are already familiar with data mining procedures and database analysis. With it, you can view and analyze ARFF data files, cluster data and build regression models.
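ARFF files are plain text. They begin with a `@relation` line, followed by one `@attribute` line per column (either numeric, or nominal with the values listed in braces). Then comes `@data` and the comma-separated rows. Lines starting with `%` are comments, and `?` marks a missing value. The Python reader below is a minimal sketch that handles only that subset: it does not support quoted names, string or date attributes, or sparse rows. The sample relation and its values are made up for illustration.

```python
import io

SAMPLE_ARFF = """% made-up values for illustration only
@relation heart
@attribute hrv numeric
@attribute bpm numeric
@attribute health_grade {successful,unsuccessful}
@data
72.5,61,successful
65.0,70,unsuccessful
?,66,unsuccessful
"""


def read_arff(stream):
    """Parse a small dense ARFF file into (relation, attributes, rows).

    Handles numeric attributes and nominal attributes written as {a,b,...}.
    Quoted names, string/date attributes and sparse rows are NOT supported.
    Missing values ('?') become None.
    """
    relation, attributes, rows = None, [], []
    in_data = False
    for raw in stream:
        line = raw.strip()
        if not line or line.startswith("%"):
            continue  # skip blank lines and comments
        if not in_data:
            keyword = line.split(None, 1)[0].lower()
            if keyword == "@relation":
                relation = line.split(None, 1)[1]
            elif keyword == "@attribute":
                _, name, kind = line.split(None, 2)
                if kind.startswith("{"):
                    values = [v.strip() for v in kind.strip("{}").split(",")]
                    attributes.append((name, values))
                else:
                    attributes.append((name, kind.lower()))
            elif keyword == "@data":
                in_data = True
            continue
        row = []
        for (_name, kind), cell in zip(attributes, line.split(",")):
            cell = cell.strip()
            if cell == "?":
                row.append(None)
            elif isinstance(kind, list):
                row.append(cell)  # nominal value kept as text
            else:
                row.append(float(cell))  # numeric/real/integer all read as float
        rows.append(row)
    return relation, attributes, rows


if __name__ == "__main__":
    relation, attributes, rows = read_arff(io.StringIO(SAMPLE_ARFF))
    print(relation, attributes)
    print(rows)
```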

Weka Review

Hi, I'm Brandon. I made this video for first-time and beginner users of a data mining program called Weka. So you've already got your data file for Weka, an ARFF or CSV file probably. You want to get a little familiar with Weka using some of the popular algorithms you've heard about, like logistic regression, decision tree, neural network and support vector machine. You simply want to get your feet wet with Weka. You can get to the initial screen in Weka by opening your data file, and now you'd find it helpful to see examples of data pre-processing, building models and interpreting results in Weka.

At the risk of redundancy: this demo is only for first-time or beginner users of Weka. It's barely going to scratch the surface, and you won't be able to apply even the intermediate data mining techniques that Weka is based on after watching this. But when I learn brand-new things, I like to start with analogies, examples and even the simplest of demonstrations. At this point I may tell you that Weka is actually not complicated given what it can do, and that it's very straightforward and easy to use. But if you're like me, that won't resonate until you see some do-it-yourself Weka examples and exercises. Let's get started with data mining in Weka.

You've probably already seen this initial screen when you open your data set in the Weka Explorer. It's a helpful screen showing you some things about the data set, but it most certainly is not why people use Weka. The Classify tab is where Weka most commonly does its thing. It contains the four algorithms I'll demo, which again are logistic regression, decision tree, neural network and support vector machine. I'll run my data in Weka using logistic regression in a moment, but I'd like to say one last thing in opening. So many people learning Weka have systems in mind for improved decision-making. The knowledge for such systems can be based on thinking people as well as thinking machines, and thinking machines is what Weka does best. Weka is
a collection of, quote-unquote, machine learning algorithms. Machine learning is a form of artificial intelligence, and artificial intelligence is a form of computer science with software capable of self-modification: programs capable of changing themselves, programs capable of improving themselves. Let's say I want to train a computer to train itself to play chess; maybe I'll have it do a logistic regression algorithm in Weka. Let's say I want my data set to dispense live, personalized advice resembling Amazon's "customers who buy X also buy Y"; maybe I'll have it do a nearest neighbor algorithm in Weka.

This demo won't emphasize Weka's machine learning, artificial intelligence approach to knowledge discovery. It may feel more like deriving knowledge from classical statistical techniques. Weka can do human learning, or "thinking people" data analysis, as well. That's mostly how I got familiar with Weka, and that's mostly what I'm about to show. My last thought before we start, I promise: if you haven't heard of a hybrid knowledge system for decision-making, or haven't understood where knowledge based on machines that learn to think begins, I hope that after watching this you'll be able to visualize the Weka toolkit. I also hope you'll see how machine-based advice in these knowledge systems actually started interpreting data.

Very briefly, let me show you the data I used when I got my feet wet with Weka, which I use for this demo. It's basic info about my heart over 20 days. I collected four measurements per day using a chest belt, so it's 80 measurements, and each measurement has seven data points associated with it. I was not trying to use this data set in Weka to discover useful knowledge. I chose it because I collected it and had already studied it from many angles. Since I was familiar with each measurement and metric, it helped my Weka learning curve. For example, a seemingly simple variable confused the J48 decision tree algorithm, but I knew the data and immediately knew why. So let's look at the
graph. Red indicates a successful grade; blue indicates an unsuccessful grade. The first two data points were numbers that the chest belt device gave me as is. As we can see, for the first metric, heart rate variability, there was a direct relationship: higher is better. For the second metric, heartbeats per minute, there was an inverse relationship: lower is better. The other five data points were not given to me as is. That's where you, the data miner, put on your thinking cap to decide how to tweak your data and give Weka the capability of discovering useful information. This is part of pre-processing, and if you have initiative and aptitude for data pre-processing techniques, you'll be effective at data mining with Weka.

The third metric, day ID, was the day (1 through 20) on which I took the heart measurement. The fourth metric, sequence ID, was whether it was that day's first, second, third or fourth measurement. The fifth metric, pattern sleep, was whether the measurement was taken when my sleep pattern was irregular or regular. In the first 40 measurements sleep times were very erratic, and in the last 40 they were very similar. The sixth metric, hours awake, was the number of hours I had been awake at the time of measurement. I measured when I woke up, two to five hours after that, two to five hours after that, and two or more hours after that, before I went to sleep. The seventh metric, health grade, was the aforementioned success or failure grade. Measurements with a heart rate variability of 70.0 or above were considered successful, and those below 70.0 were considered unsuccessful.

To see what the data set may look like in a spreadsheet program, let's click Edit. Scrolling down, we see all 80 measurements, or, as they're called in Weka, instances. On top, for each instance, we see all seven of what Weka calls attributes, but this is the kind of terminology you don't care about until you see Weka in action. Let's start with logistic regression in Weka: in Classify, choose functions
and then Logistic. In some cases Weka may not let you select it at first. If so, don't sweat it; that's one of the things we're going to learn about Weka later on. You may be wondering what logistic regression is and find that you can't make sense of the description you get when you right-click for its properties. If so: it's a highly regarded classical statistical technique for making predictions. Once you get good at Weka, you may want to train a logistic regression formula so it learns to predict something with increasing accuracy.

Using Weka for the first time, I remember just wanting to see a basic example. I had 80 past events to go off of, each with up to seven attributes. So I pictured a CEO asking me how accurately logistic regression could predict the seventh attribute if I had only the first and sixth. That is, when the 81st event happens, how accurately can it predict the seventh, just based on how the first and sixth related to one another the first 80 times? Next I was going to click the other attributes, remove them from the data set, and run the logistic regression with just attributes one, six and seven. Since I was learning Weka for the first time, however, I figured, why not also run logistic regression with all the data points we've got? Would a logistic regression formula predict more accurately with two or with six data points? I ran logistic regression twice and along the way learned some introductory Weka concepts.

In Classify, I looked at the pull-down menu and saw that that's where you put what you're predicting. The CEO asked me to predict the health grade attribute, but I also thought: what if the CEO asked me to predict another attribute, like heart rate variability? I clicked Start, and it said it can't do numeric classes. Here it's grayed out, but when I did it, I got an error saying it cannot handle this type of class. This is one of the first things you'll notice when you get your feet wet in Weka: not every algorithm can predict every data point. This logistic regression algorithm won't predict numeric class data, but it will predict
nominal class data.

Next I asked myself which option to use under Test options. "Use training set" would have run logistic regression on the data file as is. Since I'm coming up with a predictive model, though, I ended up going with 10-fold cross-validation instead. It challenges the logistic regression a bit more and ultimately makes its ability to predict accurately more valid. What I mean by that is this: if that CEO knows some statistics and wants to take my predictive analysis seriously, I may be asked whether I ran my formula through any cross-validation when testing it. Later we'll take a closer look at what that means.

So, relating all six of these data attributes (a heart rate score, another heart rate score, the day number, things like that), let's see what the first logistic regression formula comes out as. To run logistic regression, click Start in Classify. On top we see the formula: it has taken the six attributes and assigned a weight to each of them. With all six, logistic regression initially predicted with 92.5% accuracy. As I interpreted the results, I looked at the confusion matrix at the bottom. It gets tripped up more on the successful measurements, where it's 32 out of 36 correct, than on the unsuccessful ones, where it's 42 out of 44 correct.

Now let's go back and use only those two data points, to see whether that helps or hurts this logistic regression algorithm's prediction accuracy. We click the data points we don't need and click Remove. This was my original formula: we have two independent variables, and based on how they relate to each other, we'll see how closely we can predict the dependent, or outcome, variable. Again, if we pick heart rate variability as the dependent or outcome variable in the pull-down menu, this logistic regression algorithm tells us it won't do numeric classes. Now let's run it again. This time we get 98.75% prediction accuracy. At the bottom, we see all 44 unsuccessful measurements were predicted correctly, and 35 of 36 successful ones were
predicted correctly. For this logistic regression algorithm, removing data points during pre-processing improved the algorithm, which is not always the case. This was another one of those first-time-seeing-it-in-Weka lessons. With some algorithms, like this one, it's inefficient to just throw all the data you can at them. With others, like the J48 decision tree, that's the more efficient thing to do.

Let's click Classify, choose trees, then J48, which is a decision tree. Again I asked myself, hypothetically, what do I want this decision tree formula to try to predict and find out for me? Since logistic regression did not work with numeric classes, I figured I'd try to predict a numeric class this time. So again I went to the pull-down menu and said, let's use the decision tree to predict heart rate variability. That is, if I give this decision tree algorithm all six data points, how accurately can it predict the seventh one, or can it at all? As with the logistic regression algorithm, the J48 decision tree algorithm does not work with numeric classes. J48 does work with nominal classes, and since I had three, I ran each in the decision tree just to get a little more familiar with Weka.

First I did health grade, and as you can see, it predicted with 100% accuracy. To see how, I clicked Visualize tree. The tree looked at all six data points and picked heart rate variability as the most relevant for a prediction based on one question. Simply by asking whether the HRV was above 69.5, or below or equal to it, it predicted with 100% accuracy whether the health grade was successful or unsuccessful. One small thing to note: I graded success at 70 and above, but the decision tree here says I actually didn't have any values from 69.6 to 70. So 69.5 is the actual line in the sand for classifying success, and that gave me a clearer way of thinking about the metric.

Next I put pattern sleep in the pull-down menu, and again the decision tree got one hundred percent. I clicked Visualize tree, and it showed that it related day ID most to the outcome
variable: was the day ID more than ten? It could predict based on this one question. But the third nominal class variable was not as simple to predict: sequence ID was predicted correctly only 86.25% of the time. Visualizing the decision tree shows what the model settled on. The formula started with the hours awake variable, and further down it added the day ID variable. At certain points, though, I saw uncertainty; the tree is reflecting the confusion matrix.

Let's look at the sequence ID variable to see why. It looks nice and segmented, but it has overlap. I took four heart measurements each day:

- first, as soon as I woke up;
- second, about two to five hours after that;
- third, about two to five hours after that;
- fourth, two or more hours after that, before I went to sleep.

So a second-sequence measurement can be taken at about five hours awake, and so can a third. Likewise, a third-sequence measurement can be taken at about seven hours awake, and so can a fourth. Now you see why it's so easy to predict the first one and so difficult to predict the middle ones.

Along those lines, the decision tree showed me another introductory Weka lesson. After 18 days it was only 83% accurate, and I saw this decision tree algorithm improve to 86% with 8 more instances. So we can assume that with more instances or more attributes, this tree will have more potential branches and get more and more accurate.

Next up was neural network. Click Classify, choose functions, then MultilayerPerceptron, which is a neural network. Again, to get my feet wet with neural networks, I stepped back and considered a hypothetical: what could I want a neural network to predict or find out for me? I chose two things. First, I wanted to predict the complex sequence ID variable, which gave the decision tree trouble. Second, I wanted to predict a numeric class, HRV, because the first two algorithms only worked with nominal classes. And again I asked whether I should remove some data points, as with logistic regression, or leave them all
in, as with the decision tree. It seemed that the more data points MultilayerPerceptron had, the better, so I left all seven in. I looked at Test options and chose Percentage split this time. A 66 percent split means it builds its initial model, or formula, using 53 of the 80 instances, which again were heart measurements I took. After the initial model is built, it runs it on the last third, or 27 instances, to test its accuracy. So let's see how accurate the algorithm was in predicting sequence ID on that fresh set of 27 instances it held out for testing. It was 89%. As you saw, it got all of the first sequence right, there's uncertainty in the middle ones, and it got all of the last one right. I looked at the model it built and didn't quite understand it, but it was helpful nonetheless to see a third distinct algorithm in Weka that can be used for data analysis.

I was just trying to get initial experience with Weka, and so I was happy to see MultilayerPerceptron, a neural network algorithm that can handle a numeric class such as HRV. I clicked Start, looked at the results, and noticed that a numeric class did not get the same kind of summary as a nominal class. Remember how it got 24 of 27 instances correctly classified, or 89%? Here, with numeric, it seemed to be less about correct versus incorrect. Instead of saying it got 0 out of 27 correct, the emphasis seemed to be more on how close it got. Error statistics were in the summary, but here I went to More options so I could output predictions and see accuracy in another way. I reran it, and the output predictions showed what this neural network model predicted for those 27 instances, side by side with the actual values. My first thought was that this is the kind of thing I can paste into a spreadsheet for anyone to understand. Say I need to ask whether these predictions are close enough, because here's where we're at with the model. I pictured people who don't know what root mean squared error is saying yes, I see close enough accuracy right
here between these two, so it's useful now; or maybe saying no, it's not useful now.

Finally, to visualize the neural network's formula, I turned the GUI on. I right-clicked where it said MultilayerPerceptron, chose Properties, set GUI to True and clicked OK. To run it again, I clicked Start. I thought this visual was pretty self-explanatory, and you can see why they call it a neural network. At the bottom are the epochs. With more epochs, this algorithm's error rate decreases over time, so I could plug in 50,000 epochs, for example, and it would get smarter.

Last, I looked at one more type of algorithm in Weka, called support vector machine. In Classify, choose functions, and you'll see there's SMO and SMOreg. If you look at their properties, you'll see that although both are based on support vector machines, they are different algorithms. Let's choose SMOreg to predict a nominal variable. Here we see the reverse of before: this algorithm won't accept a nominal class, but it will accept a numeric class. That's another introductory lesson.

This doesn't mean that a nominal class can never work with SMOreg. I may be able to convert it just by opening the data file, representing the data differently and saving the variable as a numeric class this time, for example. Or I may want to run one of the many filters in Weka. As a data miner, you spend a lot of time changing data in ways that can make an algorithm more efficient. Those are some more examples of what's called data pre-processing, and data pre-processing is a big part of using Weka.

Now let's use the training set as is, without any cross-validation, to see what it looks like. Let's also check Output predictions, and note that the original order is left intact. Now let's run 10-fold cross-validation. Here are ten consecutive blocks numbered one through eight, one through eight, one through eight, covering my 80 instances. Let's compare their prediction accuracy. With 10-fold cross-validation, the mean absolute error is 2.75 and the
root mean squared error is 3.5. How does that compare to the test without cross-validation? I clicked it in the result list: less error, but now I saw why it's less valid as a predictive model. This demo was made with first-time and beginner Weka users in mind. I hope it gives them a few things that make understanding Weka easier and faster. Have fun using Weka. Thank you.
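The demo quotes three kinds of numbers: accuracies read off confusion matrices, a 66% percentage split of 80 instances, and mean absolute and root mean squared error for numeric predictions. The Python sketch below recomputes the first two from the counts stated in the demo (32 of 36 and 42 of 44 correct, then 35 of 36 and 44 of 44). It also gives the textbook definitions of the two error measures. The function names are hypothetical and this is not Weka's code. The rounding rule in `percentage_split` is an assumption that happens to reproduce the 53/27 split.

```python
import math


def accuracy(confusion):
    """Fraction on the diagonal of a square confusion matrix (rows = actual class)."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


def percentage_split(n, percent):
    """(train, test) sizes, assuming the training size is rounded to the nearest integer."""
    n_train = round(n * percent / 100)
    return n_train, n - n_train


def mean_absolute_error(actual, predicted):
    """Average size of the miss, ignoring direction."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def root_mean_squared_error(actual, predicted):
    """Square root of the average squared miss; large misses weigh more."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


if __name__ == "__main__":
    # Rows = actual (successful, unsuccessful); columns = predicted, per the demo's counts.
    print(accuracy([[32, 4], [2, 42]]))  # first logistic regression run
    print(accuracy([[35, 1], [0, 44]]))  # after removing attributes
    print(percentage_split(80, 66))      # 66% split of the 80 heart measurements
```

These calculations reproduce the 92.5%, 98.75% and 53/27 figures quoted in the demo. Squaring magnifies large misses, so RMSE is always at least as large as MAE. That is consistent with the 3.5 versus 2.75 reported above.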


| File Size | Downloads | Added | User rating | Company | Supported Operating System |
|---|---|---|---|---|---|
| 52.6 MB | 149278 | January 31 2022 | 4.1 | Weka Team | Win All |

User reviews

November 18, 2017, Domenico thinks: Thanks for the Weka serial number